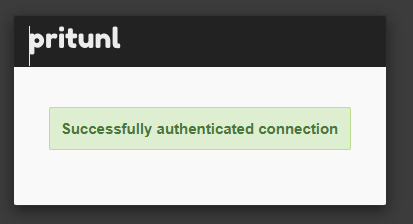

A long way back in the setup, I did manage to find a 500 error:

    [evening-waves-5326][2025-03-31 00:49:59,552][ERROR] Azure auth check request error
      user_id = "<ID STRING>"
      user_name = "CBailey"
      status_code = 500
      content = "b''"
    Traceback (most recent call last):
      File "/usr/lib/pritunl/usr/lib/python3.9/threading.py", line 937, in _bootstrap
        self._bootstrap_inner()
      File "/usr/lib/pritunl/usr/lib/python3.9/threading.py", line 980, in _bootstrap_inner
        self.run()
      File "/usr/lib/pritunl/usr/lib/python3.9/site-packages/cheroot/workers/threadpool.py", line 120, in run
        keep_conn_open = conn.communicate()
      File "/usr/lib/pritunl/usr/lib/python3.9/site-packages/cheroot/server.py", line 1287, in communicate
        req.respond()
      File "/usr/lib/pritunl/usr/lib/python3.9/site-packages/cheroot/server.py", line 1077, in respond
        self.server.gateway(self).respond()
      File "/usr/lib/pritunl/usr/lib/python3.9/site-packages/cheroot/wsgi.py", line 136, in respond
        response = self.req.server.wsgi_app(self.env, self.start_response)
      File "/usr/lib/pritunl/usr/lib/python3.9/site-packages/flask/app.py", line 2213, in __call__
        return self.wsgi_app(environ, start_response)
      File "/usr/lib/pritunl/usr/lib/python3.9/site-packages/flask/app.py", line 2190, in wsgi_app
        response = self.full_dispatch_request()
      File "/usr/lib/pritunl/usr/lib/python3.9/site-packages/flask/app.py", line 1484, in full_dispatch_request
        rv = self.dispatch_request()
      File "/usr/lib/pritunl/usr/lib/python3.9/site-packages/flask/app.py", line 1469, in dispatch_request
        return self.ensure_sync(self.view_functions[rule.endpoint])(**view_args)
      File "/usr/lib/pritunl/usr/lib/python3.9/site-packages/pritunl/auth/app.py", line 26, in _wrapped
        return call(*args, **kwargs)
      File "/usr/lib/pritunl/usr/lib/python3.9/site-packages/pritunl/handlers/key.py", line 1235, in key_wg_post
        clients.connect_wg(
      File "/usr/lib/pritunl/usr/lib/python3.9/site-packages/pritunl/clients/clients.py", line 1560, in connect_wg
        auth.authenticate()
      File "/usr/lib/pritunl/usr/lib/python3.9/site-packages/pritunl/authorizer/authorizer.py", line 151, in authenticate
        self._check_call(self._check_sso)
      File "/usr/lib/pritunl/usr/lib/python3.9/site-packages/pritunl/authorizer/authorizer.py", line 189, in _check_call
        func()
      File "/usr/lib/pritunl/usr/lib/python3.9/site-packages/pritunl/authorizer/authorizer.py", line 1178, in _check_sso
        if not self.user.sso_auth_check(
      File "/usr/lib/pritunl/usr/lib/python3.9/site-packages/pritunl/user/user.py", line 443, in sso_auth_check
        logger.error('Azure auth check request error', 'user',
      File "/usr/lib/pritunl/usr/lib/python3.9/site-packages/pritunl/logger/__init__.py", line 55, in error
        kwargs['traceback'] = traceback.format_stack()

However, even that error no longer appears in the logs when a login attempt happens now.