Hi, the issue we are having is that clients (profiles) connect to the server successfully, but they cannot be reached over the VPN.

The clients can talk to each other (ping/ssh works).

The same clients connect fine to other pritunl servers on the same host, and they can be reached over the VPN through those servers.

We first noticed the issue a week ago on one of our test systems. Purging and reinstalling pritunl-client and its configuration does not fix it.

The second client failed when we updated its client version.

The third failed when we restarted the client.

We have 100+ other clients connected to the affected server, and we are worried that the same thing might happen to them.

The clients are running pritunl-client versions 1.3.3343.50-0ubuntu1~jammy and 1.3.3373.6-0ubuntu1~jammy. We also tested with pritunl-client-electron v1.3.3373.6.

The host is running pritunl version v1.30.3343.47.

Client log:

2023-01-17 11:36:32 [{client_id}] Peer Connection Initiated with [AF_INET]{server_ip}:2001

2023-01-17 11:36:43 Data Channel: using negotiated cipher 'AES-128-GCM'

2023-01-17 11:36:43 Outgoing Data Channel: Cipher 'AES-128-GCM' initialized with 128 bit key

2023-01-17 11:36:43 Incoming Data Channel: Cipher 'AES-128-GCM' initialized with 128 bit key

2023-01-17 11:36:43 TUN/TAP device tun3 opened

2023-01-17 11:36:43 net_iface_mtu_set: mtu 1500 for tun3

2023-01-17 11:36:43 net_iface_up: set tun3 up

2023-01-17 11:36:43 net_addr_v4_add: 10.100.250.1/24 dev tun3

2023-01-17 11:36:43 /tmp/pritunl/bfad83e3986bcc9c-up.sh tun3 1500 1553 10.100.250.1 255.255.255.0 init

<14>Jan 17 11:36:43 bfad83e3986bcc9c-up.sh: Link 'tun3' coming up

<14>Jan 17 11:36:43 bfad83e3986bcc9c-up.sh: Adding IPv4 DNS Server 8.8.8.8

<14>Jan 17 11:36:43 bfad83e3986bcc9c-up.sh: SetLinkDNS(45 1 2 4 8 8 8 8)

2023-01-17 11:36:43 WARNING: this configuration may cache passwords in memory -- use the auth-nocache option to prevent this

2023-01-17 11:36:43 Initialization Sequence Completed
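To cross-check the address the client configured on its tunnel against the one the server pool returned, the relevant line can be pulled out of the client log with a small script. This is only a rough sketch and not part of pritunl: the function name `tunnel_addresses` is made up for illustration, and it assumes the `net_addr_v4_add: <address>/<prefix> dev <interface>` line format seen in the client log above.

```python
import re

# Matches OpenVPN client log lines such as:
#   2023-01-17 11:36:43 net_addr_v4_add: 10.100.250.1/24 dev tun3
# The interface pattern stops at the first character that is not a
# letter, digit, underscore, dot or dash, so trailing junk is ignored.
ADDR_LINE = re.compile(
    r"net_addr_v4_add:\s+"
    r"(?P<addr>\d{1,3}(?:\.\d{1,3}){3})/(?P<prefix>\d{1,2})"
    r"\s+dev\s+(?P<iface>[\w.-]+)"
)


def tunnel_addresses(log_text):
    """Return (interface, address, prefix_length) for each address the client added."""
    found = []
    for line in log_text.splitlines():
        match = ADDR_LINE.search(line)
        if match:
            found.append((match["iface"], match["addr"], int(match["prefix"])))
    return found


if __name__ == "__main__":
    sample = "2023-01-17 11:36:43 net_addr_v4_add: 10.100.250.1/24 dev tun3"
    print(tunnel_addresses(sample))  # [('tun3', '10.100.250.1', 24)]
```

The extracted address can then be compared by hand with the `pool returned IPv4=...` value for the same user in the server log.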

Server log:

[] Tue Jan 17 10:36:32 2023 {client_ip}:43906 [{client_id}] Peer Connection Initiated with [AF_INET6]::ffff:{client_ip}:43906

[] 2023-01-17 10:36:34 COM> SUCCESS: client-kill command succeeded

[] 2023-01-17 10:36:34 User disconnected user_id={client_id}

[] 2023-01-17 10:36:34 COM> SUCCESS: client-auth command succeeded

[] Tue Jan 17 10:36:38 2023 MULTI_sva: pool returned IPv4={ip}, IPv6=(Not enabled)

[] 2023-01-17 10:36:38 User connected user_id={client_id}

[] Tue Jan 17 10:38:18 2023 {pub_ip}:23229 peer info: IV_VER=2.5.5

[] Tue Jan 17 10:38:18 2023 {pub_ip}:23229 peer info: IV_PLAT=linux

[] Tue Jan 17 10:38:18 2023 {pub_ip}:23229 peer info: IV_PROTO=6

[] Tue Jan 17 10:38:18 2023 {pub_ip}:23229 peer info: IV_NCP=2

[] Tue Jan 17 10:38:18 2023 {pub_ip}:23229 peer info: IV_CIPHERS=AES-256-GCM:AES-128-GCM:AES-128-CBC

[] Tue Jan 17 10:38:18 2023 {pub_ip}:23229 peer info: IV_LZ4=1

[] Tue Jan 17 10:38:18 2023 {pub_ip}:23229 peer info: IV_LZ4v2=1

[] Tue Jan 17 10:38:18 2023 {pub_ip}:23229 peer info: IV_LZO=1

[] Tue Jan 17 10:38:18 2023 {pub_ip}:23229 peer info: IV_COMP_STUB=1

[] Tue Jan 17 10:38:18 2023 {pub_ip}:23229 peer info: IV_COMP_STUBv2=1

[] Tue Jan 17 10:38:18 2023 {pub_ip}:23229 peer info: IV_TCPNL=1

[] Tue Jan 17 10:38:18 2023 {pub_ip}:23229 peer info: IV_HWADDR={mac}

[] Tue Jan 17 10:38:18 2023 {pub_ip}:23229 peer info: IV_SSL=OpenSSL_3.0.2_15_Mar_2022

[] Tue Jan 17 10:38:18 2023 {pub_ip}:23229 peer info: UV_ID={id}

[] Tue Jan 17 10:38:18 2023 {pub_ip}:23229 peer info: UV_NAME=autumn-waters-9754

[] Tue Jan 17 10:38:19 2023 {pub_ip}:23229 [{client_id}] Peer Connection Initiated with [AF_INET6]::ffff:{pub_ip}:23229

[] 2023-01-17 10:38:20 COM> SUCCESS: client-kill command succeeded

[] 2023-01-17 10:38:20 User disconnected user_id={client_id}

[] 2023-01-17 10:38:20 COM> SUCCESS: client-auth command succeeded

[] Tue Jan 17 10:38:21 2023 MULTI_sva: pool returned IPv4={ip}, IPv6=(Not enabled)

[] 2023-01-17 10:38:21 User connected user_id={client_id}

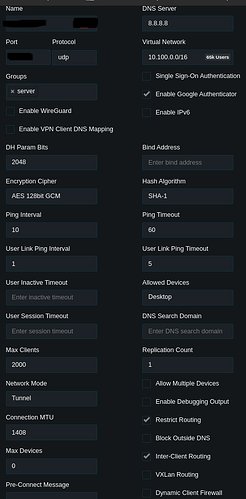

Server configuration

- The only recent change on the server was switching the allowed devices setting from any to desktop. As this requires a restart of the server to be applied, we don’t think that it is causing the issue.

Can you please advise what we can do to troubleshoot further?