In fact, we removed the permissions that let Pritunl manage the route tables (through the IAM role) because we manage them with Terraform, but all the routes are OK.

Notes:

- When we connect to our Pritunl server machine, or to any other machine in the network, we can ping the 192.168.x.x machines.

- When we ping any 192.168.x.x address from our local machine, the communication fails.

Therefore, communication inside the network works, but it fails for devices outside the network that connect through the Pritunl client.

– Logs –

177.xx.1.xx = public IP of 10.22.235.10 (machine running on-site, managed by Meraki) (working)

201.xx.230.xx = public IP of 192.168.0.38 (machine running on-site, managed by Meraki) (not working)

100.xx.147.xx and 100.xx.149.xx = public IPs of 10.102.14.173 and 10.105.62.154 (machines running on AWS)

Not working (192.168.x.x)

IPsec status log:

Listening IP addresses:

192.168.0.38

Connections:

66b3e6c205ffd665676886e5-66a17d2074319c98b759aee3-44c61f8fea3f6da8437cccdcf9f415d2_00000000: %any...100.xx.149.xx IKEv2, dpddelay=5s

66b3e6c205ffd665676886e5-66a17d2074319c98b759aee3-44c61f8fea3f6da8437cccdcf9f415d2_00000000: local: [201.xx.230.xx] uses pre-shared key authentication

66b3e6c205ffd665676886e5-66a17d2074319c98b759aee3-44c61f8fea3f6da8437cccdcf9f415d2_00000000: remote: [100.xx.149.xx] uses pre-shared key authentication

66b3e6c205ffd665676886e5-66a17d2074319c98b759aee3-44c61f8fea3f6da8437cccdcf9f415d2_00000000: child: 192.168.0.0/22 === 10.105.0.0/16 TUNNEL, dpdaction=start

66b3e6c205ffd665676886e5-66a9150705ffd66567576ea1-a542cd417947125e4288b1a37640bfbe_00000000: %any...100.xx.147.xx IKEv2, dpddelay=5s

66b3e6c205ffd665676886e5-66a9150705ffd66567576ea1-a542cd417947125e4288b1a37640bfbe_00000000: local: [201.xx.230.xx] uses pre-shared key authentication

66b3e6c205ffd665676886e5-66a9150705ffd66567576ea1-a542cd417947125e4288b1a37640bfbe_00000000: remote: [100.xx.147.xx] uses pre-shared key authentication

66b3e6c205ffd665676886e5-66a9150705ffd66567576ea1-a542cd417947125e4288b1a37640bfbe_00000000: child: 192.168.0.0/22 === 10.102.0.0/16 TUNNEL, dpdaction=start

66b3e6c205ffd665676886e5-66a265c174319c98b75b02a0-e1eed26f96a15a03b9e842a6f66d3829_00000035: %any...177.xx.1.xx IKEv2, dpddelay=5s

66b3e6c205ffd665676886e5-66a265c174319c98b75b02a0-e1eed26f96a15a03b9e842a6f66d3829_00000035: local: [201.xx.230.xx] uses pre-shared key authentication

66b3e6c205ffd665676886e5-66a265c174319c98b75b02a0-e1eed26f96a15a03b9e842a6f66d3829_00000035: remote: [177.xx.1.xx] uses pre-shared key authentication

66b3e6c205ffd665676886e5-66a265c174319c98b75b02a0-e1eed26f96a15a03b9e842a6f66d3829_00000035: child: 192.168.0.0/22 === 10.22.235.0/24 TUNNEL, dpdaction=start

Journal log:

set 11 10:38:20 xxxxxx.com.br pritunl-link[164353]: cloud: Failed to get AWS region

set 11 10:38:20 xxxxxx.com.br pritunl-link[164353]: operation error ec2imds: GetRegion, request canceled, context deadline exceeded

set 11 10:38:20 xxxxxx.com.br pritunl-link[164353]: ORIGINAL STACK TRACE:

set 11 10:38:20 xxxxxx.com.br pritunl-link[164353]: github.com/pritunl/pritunl-link/advertise.awsGetMetaData

set 11 10:38:20 xxxxxx.com.br pritunl-link[164353]: /go/src/github.com/pritunl/pritunl-link/advertise/aws.go:87 +0xc9c09b

set 11 10:38:20 xxxxxx.com.br pritunl-link[164353]: github.com/pritunl/pritunl-link/advertise.AwsAddRoute

set 11 10:38:20 xxxxxx.com.br pritunl-link[164353]: /go/src/github.com/pritunl/pritunl-link/advertise/aws.go:250 +0xc9cfa9

set 11 10:38:20 xxxxxx.com.br pritunl-link[164353]: github.com/pritunl/pritunl-link/advertise.Routes

set 11 10:38:20 xxxxxx.com.br pritunl-link[164353]: /go/src/github.com/pritunl/pritunl-link/advertise/advertise.go:129 +0xc9b32f

set 11 10:38:20 xxxxxx.com.br pritunl-link[164353]: github.com/pritunl/pritunl-link/ipsec.deploy

set 11 10:38:20 xxxxxx.com.br pritunl-link[164353]: /go/src/github.com/pritunl/pritunl-link/ipsec/ipsec.go:564 +0xcb9e04

set 11 10:38:20 xxxxxx.com.br pritunl-link[164353]: github.com/pritunl/pritunl-link/ipsec.runDeploy

set 11 10:38:20 xxxxxx.com.br pritunl-link[164353]: /go/src/github.com/pritunl/pritunl-link/ipsec/ipsec.go:674 +0xcbaa6a

set 11 10:38:20 xxxxxx.com.br pritunl-link[164353]: runtime.goexit

set 11 10:38:20 xxxxxx.com.br pritunl-link[164353]: /usr/local/go/src/runtime/asm_amd64.s:1650 +0x46ec20

set 11 10:38:22 xxxxxx.com.br charon[164413]: 08[IKE] sending DPD request

set 11 10:38:22 xxxxxx.com.br charon[164413]: 08[ENC] generating INFORMATIONAL request 1381 [ ]

set 11 10:38:22 xxxxxx.com.br charon[164413]: 08[NET] sending packet: from 192.168.0.38[4500] to 177.xx.1.xx[38855] (80 bytes)

set 11 10:38:22 xxxxxx.com.br charon[164413]: 14[NET] received packet: from 177.xx.1.xx[38855] to 192.168.0.38[4500] (80 bytes)

set 11 10:38:22 xxxxxx.com.br charon[164413]: 14[ENC] parsed INFORMATIONAL response 1381 [ ]

set 11 10:38:23 xxxxxx.com.br pritunl-link[164353]: [2024-09-11 10:38:23][INFO] ▶ state: Deploying state ◆ public_address="201.xx.230.xx" ◆ address6="" ◆ states_len=1 ◆ default_>

set 11 10:38:23 xxxxxx.com.br charon[164413]: 16[IKE] retransmit 5 of request with message ID 0

set 11 10:38:23 xxxxxx.com.br charon[164413]: 16[NET] sending packet: from 192.168.0.38[500] to 177.xx.1.xx[500] (1924 bytes)

set 11 10:38:23 xxxxxx.com.br charon[164413]: 06[CFG] rereading secrets

set 11 10:38:23 xxxxxx.com.br charon[164413]: 06[CFG] loading secrets from '/etc/ipsec.secrets'

set 11 10:38:23 xxxxxx.com.br charon[164413]: 06[CFG] loaded IKE secret for 201.xx.230.xx 100.xx.149.xx

set 11 10:38:23 xxxxxx.com.br charon[164413]: 06[CFG] loaded IKE secret for 201.xx.230.xx 177.xx.1.xx

set 11 10:38:23 xxxxxx.com.br charon[164413]: 06[CFG] loaded IKE secret for 201.xx.230.xx 100.xx.147.xx

set 11 10:38:23 xxxxxx.com.br charon[164413]: 06[CFG] rereading ca certificates from '/etc/ipsec.d/cacerts'

set 11 10:38:23 xxxxxx.com.br charon[164413]: 06[CFG] rereading aa certificates from '/etc/ipsec.d/aacerts'

set 11 10:38:23 xxxxxx.com.br charon[164413]: 06[CFG] rereading ocsp signer certificates from '/etc/ipsec.d/ocspcerts'

set 11 10:38:23 xxxxxx.com.br charon[164413]: 06[CFG] rereading attribute certificates from '/etc/ipsec.d/acerts'

set 11 10:38:23 xxxxxx.com.br charon[164413]: 06[CFG] rereading crls from '/etc/ipsec.d/crls'

set 11 10:38:23 xxxxxx.com.br pritunl-link[2260577]: Updating strongSwan IPsec configuration...

set 11 10:38:24 xxxxxx.com.br charon[164413]: 16[IKE] sending DPD request

set 11 10:38:24 xxxxxx.com.br charon[164413]: 16[ENC] generating INFORMATIONAL request 1668 [ ]

set 11 10:38:24 xxxxxx.com.br charon[164413]: 16[NET] sending packet: from 192.168.0.38[4500] to 100.xx.149.xx[4500] (80 bytes)

set 11 10:38:24 xxxxxx.com.br charon[164413]: 06[NET] received packet: from 100.xx.149.xx[4500] to 192.168.0.38[4500] (80 bytes)

set 11 10:38:24 xxxxxx.com.br charon[164413]: 06[ENC] parsed INFORMATIONAL response 1668 [ ]

set 11 10:38:27 xxxxxx.com.br charon[164413]: 04[IKE] sending DPD request

set 11 10:38:27 xxxxxx.com.br charon[164413]: 04[ENC] generating INFORMATIONAL request 1382 [ ]

set 11 10:38:27 xxxxxx.com.br charon[164413]: 04[NET] sending packet: from 192.168.0.38[4500] to 177.xx.1.xx[38855] (80 bytes)

set 11 10:38:27 xxxxxx.com.br charon[164413]: 16[NET] received packet: from 177.xx.1.xx[38855] to 192.168.0.38[4500] (80 bytes)

set 11 10:38:27 xxxxxx.com.br charon[164413]: 16[ENC] parsed INFORMATIONAL response 1382 [ ]
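The journal above mixes answered DPD exchanges with a `retransmit 5 of request with message ID 0` entry, which is easy to miss when reading by eye. A minimal sketch for pulling out such retransmits is shown below. It assumes the charon line format seen in this excerpt; the helper name `find_retransmits` is hypothetical, and pairing a retransmit with the next `sending packet` line from the same worker thread is a heuristic, not something charon guarantees.

```python
import re

# Patterns based only on the charon line format visible in the journal excerpt.
RETRANSMIT = re.compile(
    r"charon\[\d+\]: (\d+)\[IKE\] retransmit (\d+) of request with message ID (\d+)"
)
SENDING = re.compile(
    r"charon\[\d+\]: (\d+)\[NET\] sending packet: from \S+ to ([^\[\s]+)\[(\d+)\]"
)


def find_retransmits(lines):
    """Return (peer, port, attempt, message_id) for each retransmitted request.

    Heuristic: a retransmit line is paired with the next "sending packet"
    line logged by the same charon worker thread.
    """
    results = []
    pending = {}  # worker thread id -> (attempt, message id)
    for line in lines:
        m = RETRANSMIT.search(line)
        if m:
            pending[m.group(1)] = (int(m.group(2)), int(m.group(3)))
            continue
        m = SENDING.search(line)
        if m and m.group(1) in pending:
            attempt, msg_id = pending.pop(m.group(1))
            results.append((m.group(2), int(m.group(3)), attempt, msg_id))
    return results


if __name__ == "__main__":
    sample = [
        "set 11 10:38:22 xxxxxx.com.br charon[164413]: 08[IKE] sending DPD request",
        "set 11 10:38:22 xxxxxx.com.br charon[164413]: 08[NET] sending packet: from 192.168.0.38[4500] to 177.xx.1.xx[38855] (80 bytes)",
        "set 11 10:38:23 xxxxxx.com.br charon[164413]: 16[IKE] retransmit 5 of request with message ID 0",
        "set 11 10:38:23 xxxxxx.com.br charon[164413]: 16[NET] sending packet: from 192.168.0.38[500] to 177.xx.1.xx[500] (1924 bytes)",
    ]
    for peer, port, attempt, msg_id in find_retransmits(sample):
        print(f"retransmit {attempt} (message ID {msg_id}) to {peer}:{port}")
```

On the lines pasted here, this would flag only the request sent to 177.xx.1.xx on port 500, while the DPD requests to the same peer on port 4500 are answered. In IKEv2 an initiator's message ID 0 is the initial IKE_SA_INIT request, so this may point to a second connection attempt to that peer that never gets a reply, but that reading is an assumption based on this excerpt alone.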

Working (10.22.x.x)

IPsec status log:

Listening IP addresses:

10.22.235.10

Connections:

66b3e79405ffd6656768894b-66a9150705ffd66567576ea1-029f63aafbbcdbb60f3f0f4e27b96db1_00000000: %any...100.xx.147.xx IKEv2, dpddelay=5s

66b3e79405ffd6656768894b-66a9150705ffd66567576ea1-029f63aafbbcdbb60f3f0f4e27b96db1_00000000: local: [177.xx.1.xx] uses pre-shared key authentication

66b3e79405ffd6656768894b-66a9150705ffd66567576ea1-029f63aafbbcdbb60f3f0f4e27b96db1_00000000: remote: [100.xx.147.xx] uses pre-shared key authentication

66b3e79405ffd6656768894b-66a9150705ffd66567576ea1-029f63aafbbcdbb60f3f0f4e27b96db1_00000000: child: 10.22.235.0/24 === 10.102.0.0/16 TUNNEL, dpdaction=start

66b3e79405ffd6656768894b-66a17d2074319c98b759aee3-23b9746fc7d6908674d94d57aff74814_00000000: %any...100.xx.149.xx IKEv2, dpddelay=5s

66b3e79405ffd6656768894b-66a17d2074319c98b759aee3-23b9746fc7d6908674d94d57aff74814_00000000: local: [177.xx.1.xx] uses pre-shared key authentication

66b3e79405ffd6656768894b-66a17d2074319c98b759aee3-23b9746fc7d6908674d94d57aff74814_00000000: remote: [100.xx.149.xx] uses pre-shared key authentication

66b3e79405ffd6656768894b-66a17d2074319c98b759aee3-23b9746fc7d6908674d94d57aff74814_00000000: child: 10.22.235.0/24 === 10.105.0.0/16 TUNNEL, dpdaction=start

66b3e79405ffd6656768894b-66a17d2574319c98b759aee9-04b6fea299fd8485b13152228117b8f4_00000284: %any...201.xx.230.xx IKEv2, dpddelay=5s

66b3e79405ffd6656768894b-66a17d2574319c98b759aee9-04b6fea299fd8485b13152228117b8f4_00000284: local: [177.xx.1.xx] uses pre-shared key authentication

66b3e79405ffd6656768894b-66a17d2574319c98b759aee9-04b6fea299fd8485b13152228117b8f4_00000284: remote: [201.xx.230.xx] uses pre-shared key authentication

66b3e79405ffd6656768894b-66a17d2574319c98b759aee9-04b6fea299fd8485b13152228117b8f4_00000284: child: 10.22.235.0/24 === 192.168.0.0/22 TUNNEL, dpdaction=start

nmcli output on the machine with the connection issue (192.168.0.38):

[cloud@******* ~]$ nmcli

ens192: connected to ens192

"VMware VMXNET3"

ethernet (vmxnet3), 00:50:56:BB:61:0A, hw, mtu 1500

ip4 default

inet4 192.168.0.38/22

route4 default via 192.168.0.5 metric 100

route4 192.168.0.0/22 metric 0

route4 10.102.0.0/16 via 192.168.0.5 metric 0

route4 10.105.0.0/16 via 192.168.0.5 metric 0 <------ pritunl clients cidr block

route4 10.22.235.0/24 via 192.168.0.5 metric 0

inet6 fe80::250:56ff:febb:610a/64

route6 fe80::/64 metric 1024

My machine

tun0: connected (externally) to tun0

"tun0"

tun, sw, mtu 1500

inet4 10.105.56.21/21

route4 10.105.56.0/21 metric 0

route4 192.168.0.0/22 via 10.105.56.1 metric 0 <------ connection issue cidr block

route4 10.10.0.0/16 via 10.105.56.1 metric 0

route4 10.100.0.0/16 via 10.105.56.1 metric 0

route4 10.102.0.0/16 via 10.105.56.1 metric 0

route4 10.105.0.0/16 via 10.105.56.1 metric 0

route4 10.105.0.2/32 via 10.105.56.1 metric 0

route4 10.108.0.0/16 via 10.105.56.1 metric 0

route4 10.22.235.0/24 via 10.105.56.1 metric 0

route4 10.22.235.17/32 via 10.105.56.1 metric 0

route4 ... more

inet6 fe80::d219:66ec:aa15:828c/64

route6 fe80::/64 metric 256
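As a sanity check on the addressing, the sketch below tests whether a given pair of addresses is covered by the child traffic selectors listed in the "Not working (192.168.x.x)" status output. It uses only Python's standard `ipaddress` module; the helper name `matching_selectors` is hypothetical, and the check says nothing about routing or firewall rules along the path, only about whether the selectors cover the two addresses.

```python
import ipaddress

# Child traffic selectors copied from the "Not working (192.168.x.x)"
# IPsec status output: (local subnet, remote subnet).
CHILD_SELECTORS = [
    ("192.168.0.0/22", "10.105.0.0/16"),
    ("192.168.0.0/22", "10.102.0.0/16"),
    ("192.168.0.0/22", "10.22.235.0/24"),
]


def matching_selectors(local_ip, remote_ip, selectors=CHILD_SELECTORS):
    """Return the selector pairs whose subnets contain both addresses."""
    local = ipaddress.ip_address(local_ip)
    remote = ipaddress.ip_address(remote_ip)
    return [
        (local_net, remote_net)
        for local_net, remote_net in selectors
        if local in ipaddress.ip_network(local_net)
        and remote in ipaddress.ip_network(remote_net)
    ]


if __name__ == "__main__":
    # 192.168.0.38 is the on-site host; 10.105.56.21 is the tun0 address
    # of the client machine shown above.
    print(matching_selectors("192.168.0.38", "10.105.56.21"))
```

For the client address 10.105.56.21 and the host 192.168.0.38, the pair falls inside the `192.168.0.0/22 === 10.105.0.0/16` child, so the selectors themselves appear to cover this traffic. That suggests looking at the return path or at forwarding on the link hosts rather than at the selector definitions, though this is only a hint drawn from the addresses shown here.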

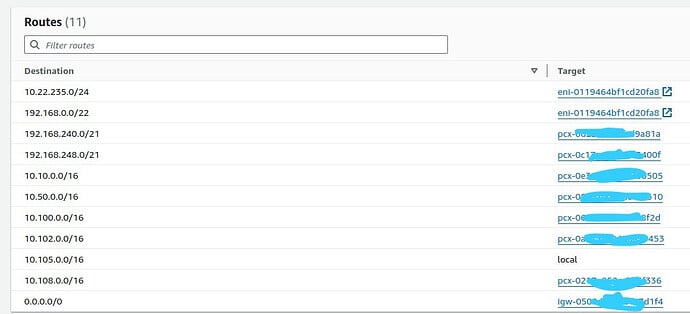

AWS Route table